Our lab focuses on integrating eye-tracking into real-world applications and user experience research to observe and quantify user attention, cognitive load, and interaction patterns. By gathering real-time gaze data, we can assess usability, measure reading flow, and evaluate how users interact with interfaces.

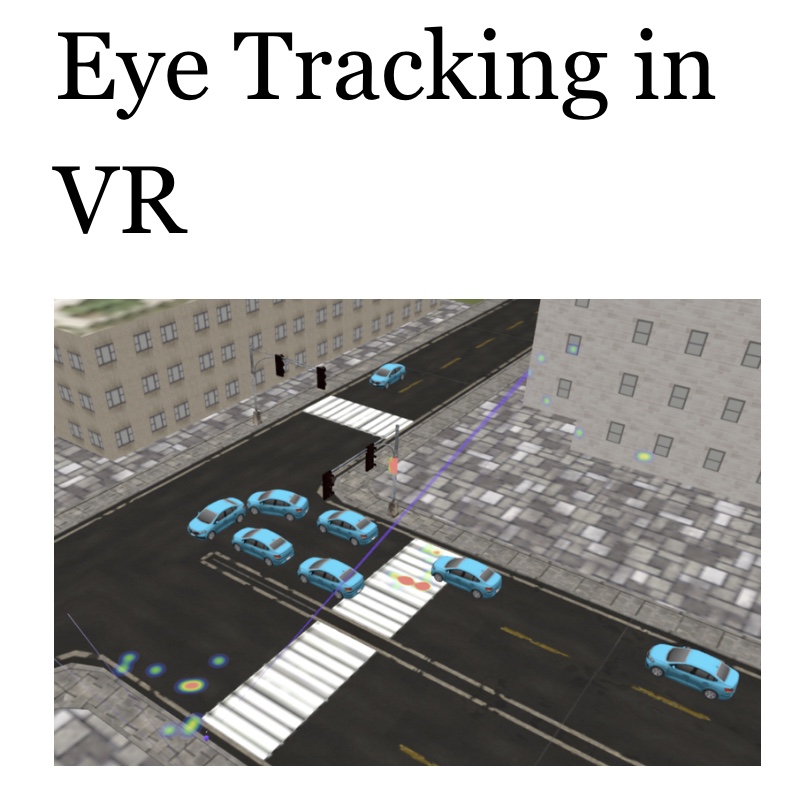

Our lab explores the integration of eye-tracking in virtual reality (VR) to enhance user interaction, performance analysis, and adaptive system design. By capturing real-time gaze data, we gain insights into attention patterns, cognitive load, and decision-making processes within immersive environments. Beyond interaction, our research uses eye-tracking data to study human behavior in virtual spaces, with the aim of improving training simulations, situational awareness, and user experience design.

Using real-time eye-tracking data, we focus on usability evaluation, measuring cognitive load, reading flow, and overall engagement. Through rigorous user testing, we analyze factors such as response accuracy, interface intuitiveness, and personalization effectiveness. By prioritizing user experience, we advance the development of assistive technologies that enhance learning while preserving natural, immersive interaction.
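As an illustration of how "reading flow" can be quantified, one common proxy in eye-tracking reading research is the regression rate: the share of eye movements between words that jump back to an earlier word. The sketch below is not the lab's own metric; it assumes fixations have already been mapped to word indices in reading order, and the function name `regression_rate` is hypothetical.

```python
from typing import Sequence


def regression_rate(word_sequence: Sequence[int]) -> float:
    """Fraction of between-word moves that go backwards (regressions).

    word_sequence: the word index (in reading order) of each consecutive
    fixation. Refixations on the same word are ignored, because they are
    not moves between words.
    """
    # Signed jump sizes between consecutive fixations, skipping refixations.
    moves = [b - a for a, b in zip(word_sequence, word_sequence[1:]) if b != a]
    if not moves:
        return 0.0
    # A negative jump means the reader went back to an earlier word.
    regressions = sum(1 for m in moves if m < 0)
    return regressions / len(moves)


# One backward jump (word 2 -> word 1) out of six between-word moves.
print(regression_rate([0, 1, 2, 1, 3, 4, 4, 5]))
```

A lower value suggests smoother, more linear reading; in practice such a score would be compared against the same reader's baseline rather than read in isolation.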

We apply AI-based methods to real-time eye-tracking data, using neural networks to model user behavior and adjust system responses. This includes predicting gaze trajectories, distinguishing voluntary from involuntary blinks, and predicting reading comprehension, enabling more precise, data-driven adaptations of the user interaction. For example, the SQHCI group has developed the Eye-tracking Translation Software (ETS), a system that detects cognitive load in real time to identify challenging words, delivers unobtrusive in-line translations, and preserves immersive reading flow.
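To make the idea of "identifying challenging words" concrete, here is a deliberately simple heuristic based on the well-known observation that readers spend longer on words that are harder to process. This is not how ETS works (ETS is described as detecting cognitive load in real time); it is a hypothetical sketch that sums fixation time per word and flags words whose total exceeds a multiple of the reader's median. The function name `flag_difficult_words` and the threshold `factor=2.0` are assumptions chosen for illustration.

```python
from collections import defaultdict
from statistics import median
from typing import Iterable, List, Tuple


def flag_difficult_words(
    fixations: Iterable[Tuple[int, float]], factor: float = 2.0
) -> List[int]:
    """Return indices of words whose total fixation time looks unusually long.

    fixations: (word_index, duration_ms) pairs, one per fixation.
    factor: hypothetical threshold, as a multiple of the median per-word total.
    """
    # Total fixation time per word (word index, not word text, so repeated
    # words at different positions are kept apart).
    totals = defaultdict(float)
    for word_index, duration_ms in fixations:
        totals[word_index] += duration_ms
    if not totals:
        return []
    # The reader's own median serves as the baseline, so slow and fast
    # readers are compared against themselves.
    baseline = median(totals.values())
    return sorted(w for w, t in totals.items() if t > factor * baseline)


# Word 2 receives two fixations (250 + 400 ms), far above the other words.
print(flag_difficult_words([(0, 200), (1, 210), (2, 250), (2, 400), (3, 190), (4, 220)]))
```

A system in the spirit of ETS could then attach an in-line translation to each flagged index; a fixed threshold like this is far cruder than a learned cognitive-load model.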

Selected Related Publications

- Minas D., Theodosiou E., Roumpas K., Xenos M., “Adaptive Real-Time Translation Assistance Through Eye-Tracking”, Special Issue Machine Learning for HCI: Cases, Trends and Challenges, AI Journal, Volume 6, Issue 1, pp. 5-28, 2025. DOI: 10.3390/ai6010005

- Vazaios S., Mallas A., Xenos M., “Eye-Tracking Based Automatic Summarization of Lecture Slides”, IEEE ICCA 2023, 5th International Conference on Computer & Applications, Cairo, Egypt, 28-30 November 2023. DOI: 10.1109/ICCA59364.2023.10401718

- Dritsa S., Mallas A., Xenos M., “Screen Reading Regions in Social Media Comments: An Eye-Tracking Analysis of Visual Attention on Smartphones”, Proceedings of the 27th Panhellenic Conference on Informatics, PCI2023, pp. 95-101, Lamia, Greece, 24-26 November 2023. DOI: 10.1145/3635059.3635074

- Evangelou S.M., Xenos M., “An Experimental Study of the Relationship Between Static Advertisements Effectiveness and Personality Traits: Using Big-Five, Eye-Tracking, and Interviews”, 25th International Conference on Human-Computer Interaction, LNCS Vol 14039 Springer, pp. 285-299, Copenhagen, Denmark, 23-28 July 2023. DOI: 10.1007/978-3-031-36049-7_22

- Mallas A., Xenos M., Margosi M., “Users’ Opinions When Drawing with an Eye Tracker”, 25th International Conference on Human-Computer Interaction, LNCS Vol 14020 Springer, pp. 428-439, Copenhagen, Denmark, 23-28 July 2023. DOI: 10.1007/978-3-031-35681-0_28
