We explore Human-Machine Interaction (HMI) by developing adaptive interfaces that optimize information presentation using real-time eye-tracking and physiological sensors, and by studying human-drone interaction.

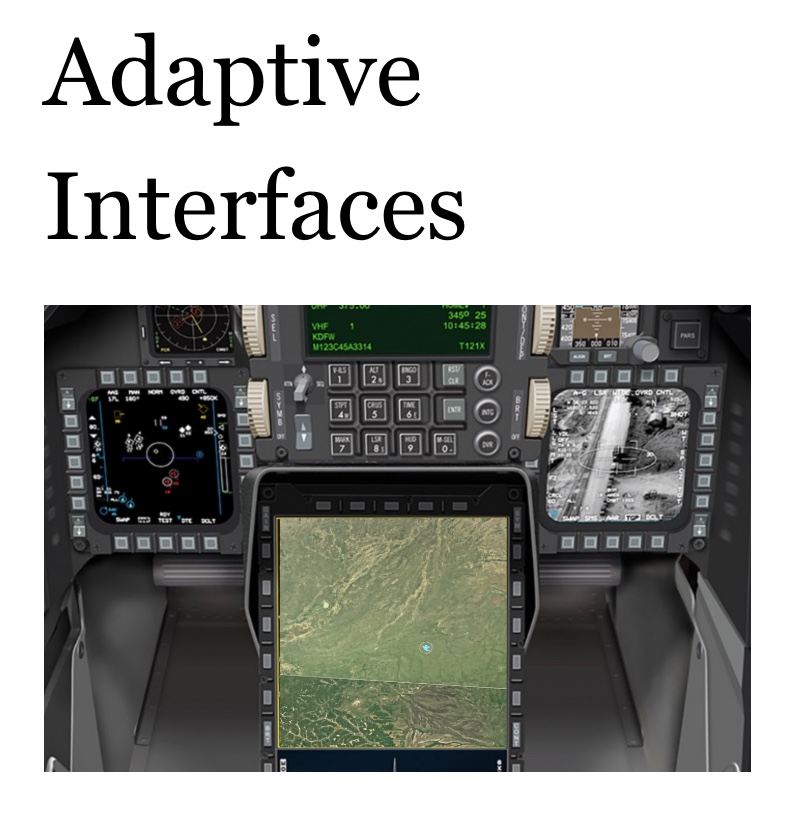

Our lab develops adaptive interface systems that utilize real-time eye-tracking to control information presentation in critical environments. Our dynamic cockpit display, for example, automatically hides symbols once the pilot has viewed them, leaving only essential data visible. This method aims to minimize visual clutter and cognitive load, thereby improving situational awareness and decision-making.
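The gaze-contingent hiding described above can be sketched as a dwell-time rule. A symbol counts as viewed once the pilot's gaze has rested inside its on-screen area for a cumulative threshold, and it is then hidden unless it is marked essential. This is a minimal sketch under stated assumptions, not the lab's implementation: the `Symbol` class, the `update_display` function, the rectangular areas of interest, the 200 ms `DWELL_THRESHOLD_MS`, and the fixed-rate stream of gaze samples are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold: cumulative gaze time (ms) after which a symbol counts as "viewed".
DWELL_THRESHOLD_MS = 200.0


@dataclass
class Symbol:
    name: str
    x: float  # left edge of the symbol's rectangular area of interest (pixels)
    y: float  # top edge
    w: float  # width
    h: float  # height
    essential: bool = False  # essential data stays visible regardless of gaze
    dwell_ms: float = 0.0    # gaze time accumulated while the symbol was visible
    visible: bool = True

    def contains(self, gx: float, gy: float) -> bool:
        """True if the gaze point (gx, gy) falls inside this symbol's area."""
        return self.x <= gx <= self.x + self.w and self.y <= gy <= self.y + self.h


def update_display(symbols, gaze_samples, sample_period_ms):
    """Accumulate dwell time per visible symbol from (x, y) gaze samples taken at a
    fixed rate, hide non-essential symbols that reach the threshold, and return
    the names of the symbols that remain visible."""
    for gx, gy in gaze_samples:
        for s in symbols:
            if s.visible and s.contains(gx, gy):
                s.dwell_ms += sample_period_ms
                if not s.essential and s.dwell_ms >= DWELL_THRESHOLD_MS:
                    s.visible = False
    return [s.name for s in symbols if s.visible]


if __name__ == "__main__":
    symbols = [
        Symbol("waypoint", 0, 0, 100, 50),
        Symbol("altitude", 200, 0, 100, 50, essential=True),
        Symbol("traffic", 0, 100, 100, 50),
    ]
    # 25 samples on the waypoint symbol, then 25 on the altitude readout.
    gaze = [(50, 25)] * 25 + [(250, 25)] * 25
    print(update_display(symbols, gaze, sample_period_ms=10.0))  # ['altitude', 'traffic']
```

With a hypothetical 100 Hz tracker (10 ms per sample), the waypoint symbol is hidden after the 20th sample on it. The essential altitude readout stays visible despite being viewed, and the traffic symbol, never looked at, stays on screen. A cumulative dwell rule is only one option. Proper fixation detection or a minimum single-fixation duration would be reasonable alternatives.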

We study human-drone interaction through drone programming, eye-tracking, and user-interface (UI) design. Our research examines how pilots react to sudden signal interruptions and turns these findings into actionable UI improvements and training recommendations that make operations safer and more reliable for every pilot. We also work with AI-powered drones that fly over crops and record video, which we then analyze with a machine learning algorithm to detect key indicators of plant health with high precision.

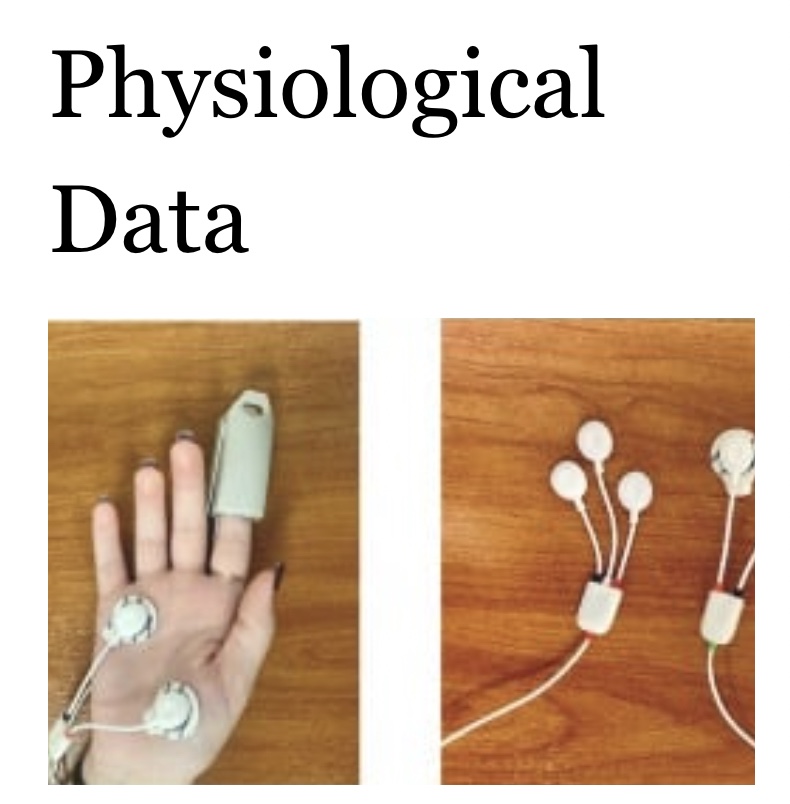

Our lab uses advanced physiological sensors to measure users’ stress levels during interactions, providing insight into how different conditions affect the human body. Using tools such as OpenSignals, we collect and analyze data from sensors including EDA (electrodermal activity), ECG (electrocardiography), and BVP (blood-volume pulse). These measurements help us understand physiological responses and assess how various stimuli affect users’ stress levels, paving the way for more personalized and effective user experiences in human-computer interaction.
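The analysis step is not detailed above. One common approach reduces ECG R-peaks (or BVP pulse peaks) to mean heart rate and RMSSD, the root mean square of successive differences between beat-to-beat (RR) intervals. RMSSD is a heart-rate-variability measure that typically decreases under acute stress. The sketch below assumes the peaks have already been detected and exported as timestamps in seconds. The function name `heart_rate_and_rmssd` is hypothetical, and the code neither reads OpenSignals files nor performs peak detection.

```python
import math


def heart_rate_and_rmssd(peak_times_s):
    """Return (mean heart rate in bpm, RMSSD in ms) from peak timestamps in seconds.

    Assumes peaks were already detected upstream (hypothetical input format)."""
    if len(peak_times_s) < 3:
        raise ValueError("need at least three peaks (two RR intervals)")
    # RR intervals: time between consecutive beats, in milliseconds.
    rr_ms = [(b - a) * 1000.0 for a, b in zip(peak_times_s, peak_times_s[1:])]
    mean_rr_ms = sum(rr_ms) / len(rr_ms)
    heart_rate_bpm = 60000.0 / mean_rr_ms
    # Successive differences between adjacent RR intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    rmssd_ms = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return heart_rate_bpm, rmssd_ms


if __name__ == "__main__":
    hr, rmssd = heart_rate_and_rmssd([0.0, 1.0, 2.0, 3.5])
    print(f"{hr:.1f} bpm, RMSSD {rmssd:.1f} ms")  # 51.4 bpm, RMSSD 353.6 ms
```

Comparing such values across experimental conditions (for example, a baseline period against a stressful task) is one way to relate stimuli to physiological stress responses. EDA features such as skin conductance responses would be analyzed separately.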

Selected Related Publications

- Minas D., Theodosiou E., Roumpas K., Xenos M., “Adaptive Real-Time Translation Assistance Through Eye-Tracking”, Special Issue Machine Learning for HCI: Cases, Trends and Challenges, AI Journal, Volume 6, Issue 1, pp. 5-28, 2025. DOI: 10.3390/ai6010005
- Mallas A., Papadatou H., Xenos M., “Maintaining Text Legibility Regarding Font Size Based on User Distance in Mobile Devices: Application Development and User Evaluation”, ISM 2023, 25th IEEE International Symposium on Multimedia, Laguna Hills, California, 11-13 December 2023. DOI: 10.1109/ISM59092.2023.00051
- Vazaios S., Mallas A., Xenos M., “Eye-Tracking Based Automatic Summarization of Lecture Slides”, IEEE ICCA 2023, 5th International Conference on Computer & Applications, Cairo, Egypt, 28-30 November 2023. DOI: 10.1109/ICCA59364.2023.10401718
- Vrettis P., Mallas A., Xenos M., “An Affect-Aware Game Adapting to Human Emotion”, Proceedings of the 6th International Conference, HCI-Games 2024, Held as Part of the 26th HCI International Conference, HCII 2024, pp. 307-322, Washington, DC, USA, June 29 – July 4, 2024. DOI: 10.1007/978-3-031-60692-2_21. Best paper award for the 6th HCI-Games track of HCII 2024.
